Hi.

Welcome to my portfolio. I document my work in product design and user experience here. Hope you have a nice stay!

This case study summarizes four experiments for Vivian Health. This work resulted in an increase in the number of reviews by over 1,000% while also increasing the quality of reviews by 7.2%.

My Role

I led Product Design for the Vivian Health Explore pod for more than 2.5 years. I collaborated with a Product Manager, a Researcher, an Engineering Manager, and two Engineers. The Explore pod uses Vivian-generated and user-generated content to provide unique career insights for clinicians at any stage of their job search or professional journey.

Timeline

This work was completed over two quarters, with downtime between iterations to reach statistical significance. During this period, I dedicated a quarter of my time to this pod.

Process

Problem

Candidates seek recommendations from their peers about where to work, but we do not have enough facility reviews from health care professionals to support that research. As a result, candidates look for this information off platform.

Much like we research a company’s culture before joining it, clinicians actively seek out information about the facilities they’d be working at. This information ranges from objective details, such as nurse:patient ratios and the charting system used, to more subjective insights, such as the unit culture or the patients they typically see. We can provide this information through reviews.

Supporting Research

In the previous quarter, we spoke with four health care professionals about leaving reviews, review content, their nursing community, and how candidates do research when job searching.

Goal

Overall, our pod’s focus is to build a foundation for collecting user-generated content that provides the most up-to-date and valuable insights into the facilities and agencies our clinicians have worked at and want to work at. This content powers Explore experiences in our hub-and-spoke content model.

Specifically for this work, our goal is to increase the number and quality of facility reviews left by candidates (users).

Top Level Success Metrics

1. Increase monthly facility reviews captured from 500 to 1,500

2. Increase the share of high-value facility reviews from 15% to 20%

Strategy

We decided to focus on quantity first, because even the minimum data we receive from a review, a 0-5 star rating, is still useful. Then we would focus on improving review quality.

Existing Experience

In the previous quarter, I cleaned up the suite of review flows to prepare for these tests.

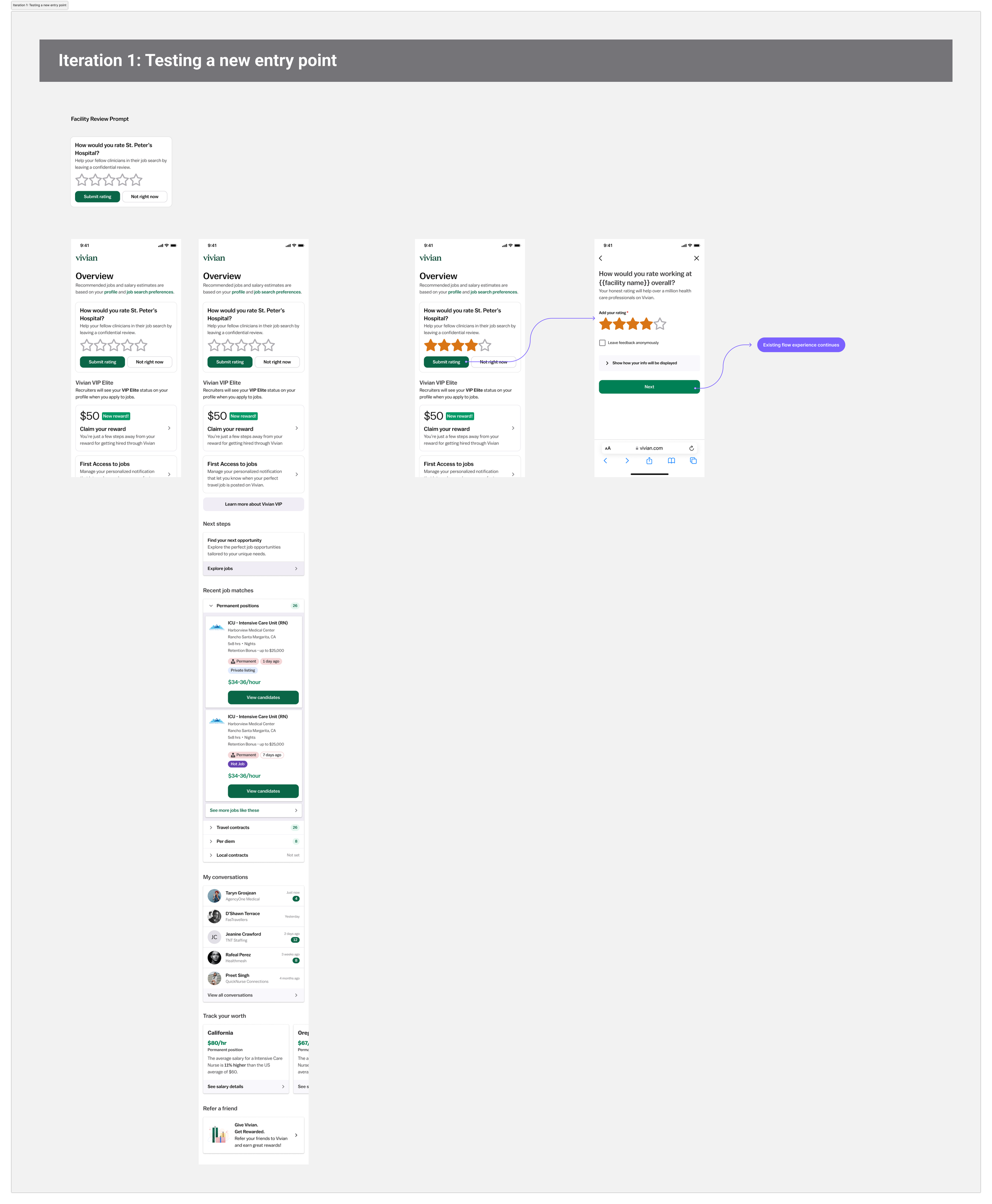

Iteration 1: Testing a new entry point

Strategy

In the previous quarter, we partnered with the communications team to test an email campaign as a new avenue for capturing reviews. That test yielded very few reviews, which told us that email was not the right channel for this task.

My PM and I decided to swing big and use the top position on the home/dashboard screen of our native app. Our data showed that our most engaged users are on the native app, so meeting them where they are felt like our best chance at success.

Ideation

I started from the current dashboard experience and tried to reuse existing patterns. I explored a few different presentations and copy combinations. When I reviewed these options with my team, we agreed that prompting for the star rating directly in the module would be the most visually engaging option while also getting us data the fastest.

Final Design

The final design we tested is below. We added a secondary CTA, “Not right now,” to capture lack of interest. For this test, the CTA did not lead anywhere; it only gave us another data point for future learning.

Results

We saw an immediate uptick in reviews created and decided to productize the flow.

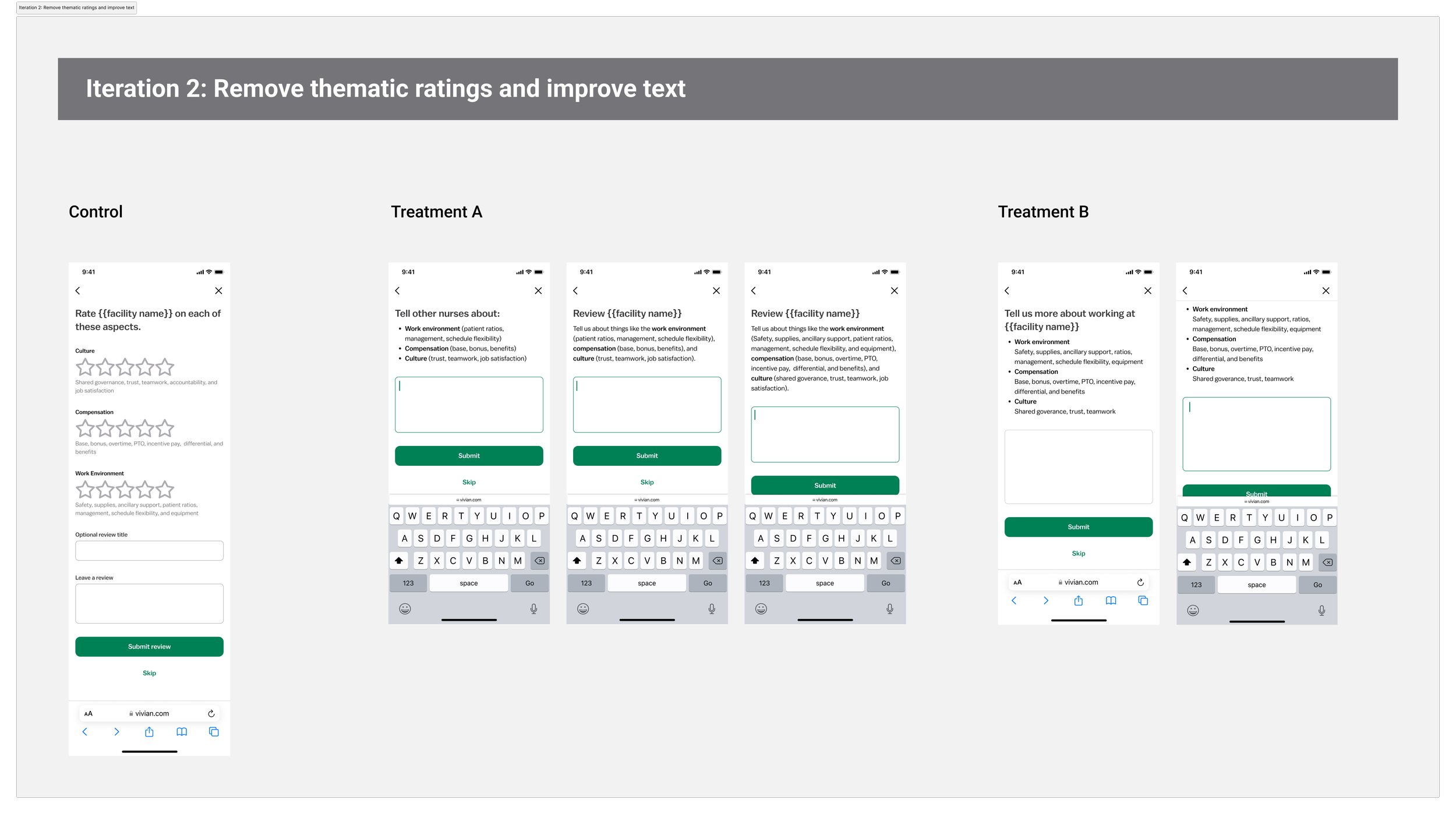

Iteration 2: Streamlining thematic ratings

Strategy

We then moved to the next step of the flow, a collection of thematic ratings. We knew this step was already a drop-off point and that our users preferred more detailed information over star ratings. We wanted to see whether we could improve flow completion while still capturing details and improving review quality (which we defined as reviews that include text).

Variants

Control - No change (Existing thematic rating capture)

Treatment A - Remove the “Thematic rating capture” page and update the free text input’s instructions (paragraph form).

Treatment B - Remove the “Thematic rating capture” page and update the free text input’s instructions (list form).

Results

Both Treatment A and Treatment B increased flow completion compared with the control. Of the two new options, Treatment B, with list-form instructions, had the highest completion rate.

Insights

The original flow assumed that a star rating input would yield more detail than a free text input alone. This assumption proved incorrect: users were more likely to complete the free text input than the star ratings.

In addition, users preferred instructions presented as a list.

Iteration 3: Improving review quality

Strategy

Next, we focused on further improving review quality by capturing feedback in a thumbs up/down format and iterating on the text input.

This iteration could have been split into two tests for cleaner results, but we decided that the feedback capture could easily be removed if we saw little or no engagement across variants.

We would capture whether the user would work at that facility again. We also added this pattern to the review display to capture whether a review was helpful, giving us a signal on our definition of “quality.”

Variants

Control - Treatment B from Iteration 2, with the repeat work question added

Treatment A - Breaking the input into Pros and Cons sections

Treatment B - Doubling down on the “list” format with more, shorter strings of text

Results

Treatment A outperformed both the control and Treatment B. Users engaged with the repeat work question across all variants, so we decided to keep it.

Next, we applied these learnings across the other review capture flows and updated the review display.